Background

As GreptimeDB evolved, some releases introduced metadata incompatibilities that required downtime for maintenance. To avoid tedious manual intervention, we decided to automate the upgrade procedure. Specifically, for upgrades involving incompatible metadata, we designed automated workflows that carry the upgrade through step by step, covering data backup, downtime maintenance, upgrade verification, and more. This automation streamlines GreptimeDB upgrades while minimizing both downtime and the risk of manual error.

Cloud-based Upgrades with Argo CD and Argo Workflows

From day one, we have built GreptimeCloud, a fully managed DBaaS specializing in time-series data storage and computation, on cloud-native technologies. Following the Infrastructure as Code (IaC) approach, we manage resource configurations as code to make deployment and resource management more efficient. Argo CD keeps our Kubernetes clusters synchronized with the GitHub repository, so resources can be deployed into the clusters quickly.

For GreptimeDB upgrades, we likewise rely on Argo CD and Argo Workflows to automate the software upgrade process.

Argo CD Resource Hook

An Argo CD Resource Hook is a mechanism for running custom operations at specific points in an application's lifecycle. Hooks can be tied to deployment, synchronization, or other lifecycle events, so custom logic runs exactly when the corresponding event occurs.

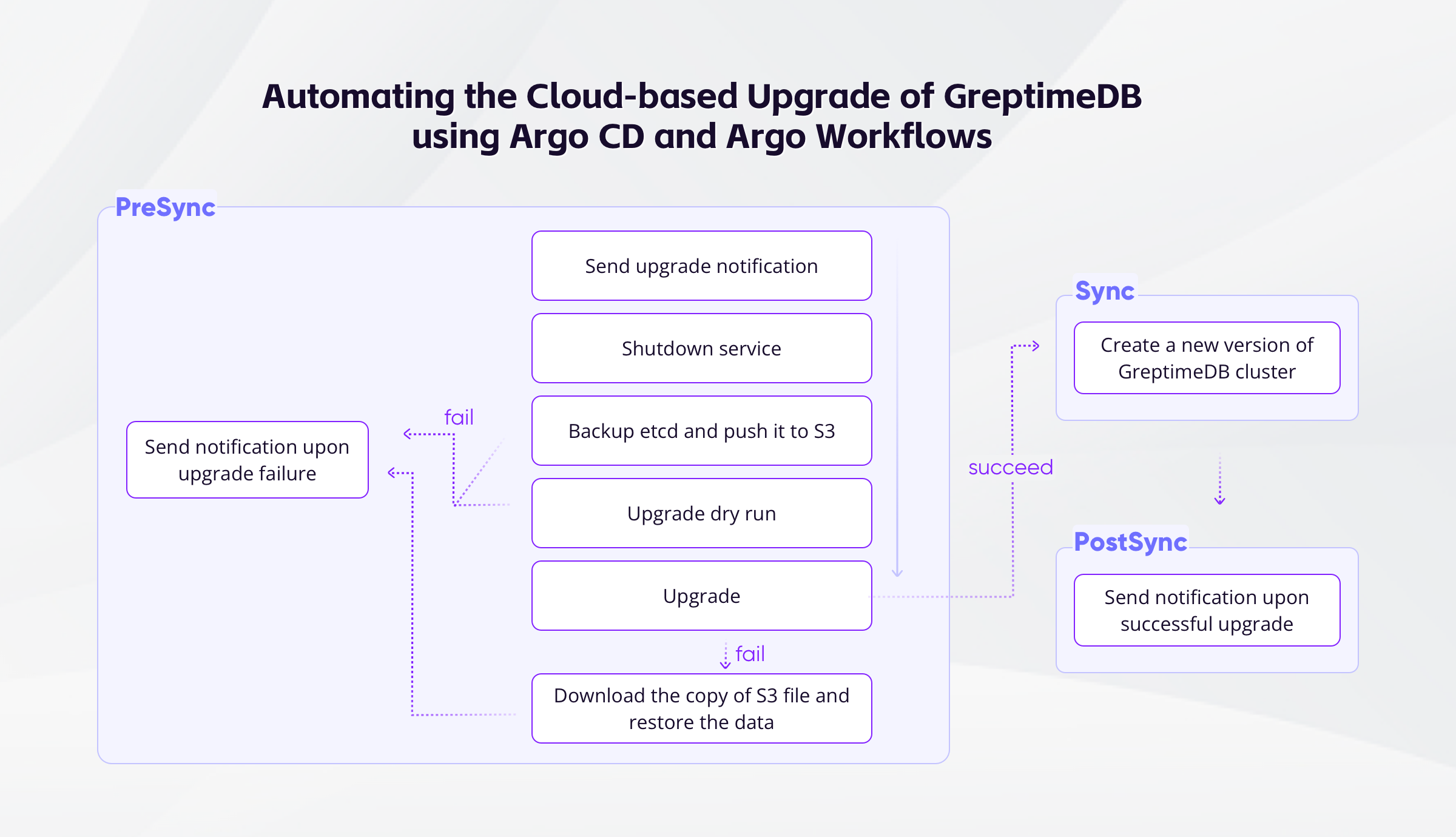

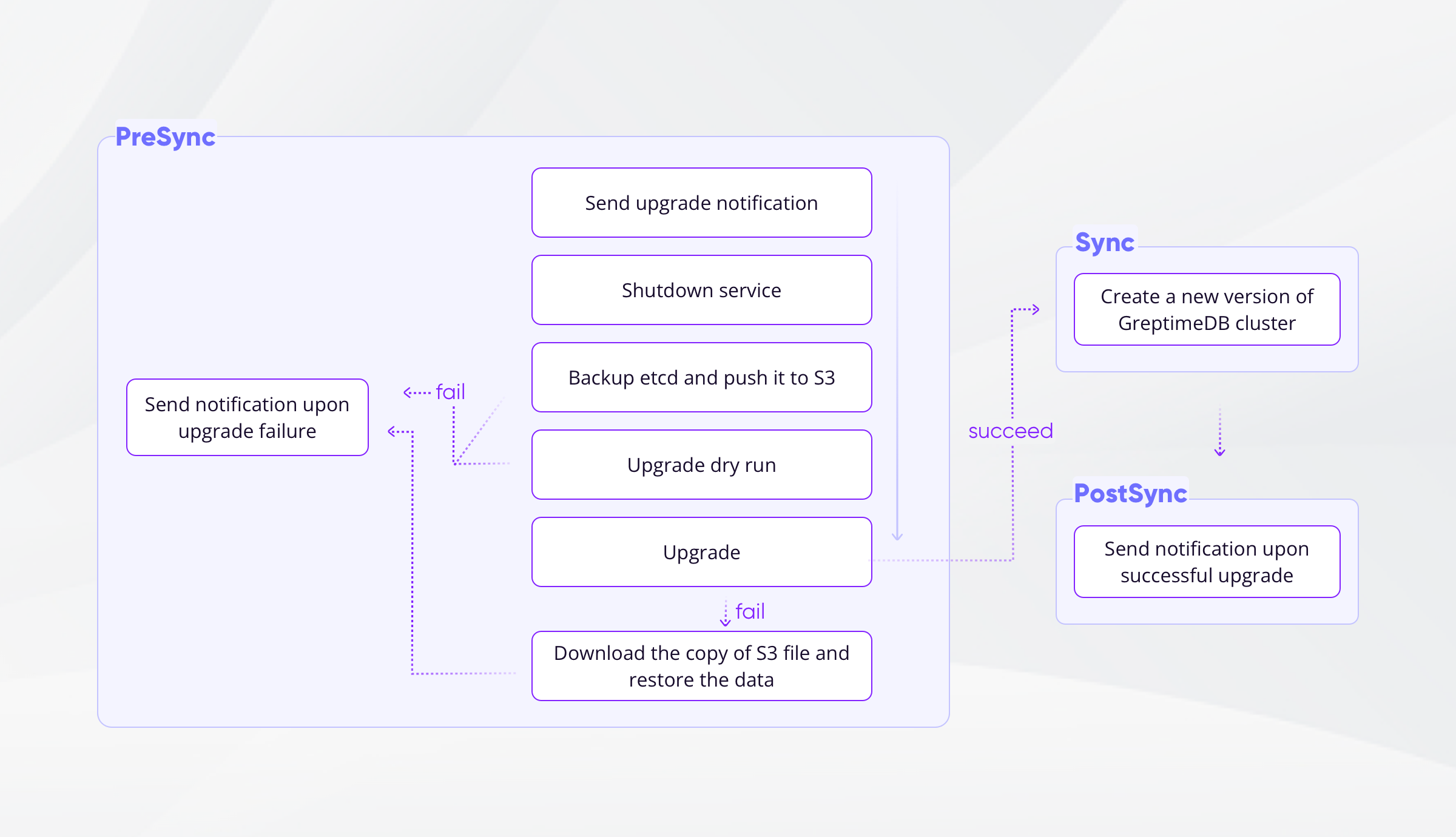

During the GreptimeDB upgrade, we implemented the following hooks:

- PreSync Hook: Executes before application synchronization. Useful for tasks such as running scripts, performing validations, or backing up data.

- Sync Hook: Executes during the synchronization process. Suited to sync-related tasks such as starting services or checking application status.

- PostSync Hook: Executes after application synchronization. Suitable for follow-up actions like notifications or other post-processing tasks.
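All three hooks are declared with the same `argocd.argoproj.io/hook` annotation; only its value differs. As a minimal illustration, the excerpts below show just the metadata that selects each phase. They are not complete resources, and the phase descriptions in the comments follow the Argo CD documentation rather than anything specific to our setup.

```yaml
# Metadata excerpts only: each belongs on a complete resource,
# such as a batch/v1 Job or an Argo Workflow.

# PreSync: runs before Argo CD applies the application's manifests.
metadata:
  annotations:
    argocd.argoproj.io/hook: PreSync
---
# Sync: runs after all PreSync hooks succeed, while the manifests are applied.
metadata:
  annotations:
    argocd.argoproj.io/hook: Sync
---
# PostSync: runs after the sync succeeds and all resources are Healthy.
metadata:
  annotations:
    argocd.argoproj.io/hook: PostSync
```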

To turn a resource into a hook, add the `argocd.argoproj.io/hook` annotation to its metadata. Here's an example of a PreSync hook:

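```yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: presync-hook
  annotations:
    # Argo CD runs this Job before syncing the rest of the application.
    # Use the exact casing from the Argo CD docs: PreSync, Sync, PostSync.
    argocd.argoproj.io/hook: PreSync
spec:
  template:
    spec:
      containers:
      - name: presync-hook
        image: image            # placeholder: an image that contains run.sh
        command: ["./run.sh"]
      restartPolicy: OnFailure
```

Argo Workflows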

Argo Workflows is an open-source workflow engine for orchestrating and executing tasks and workflows. It is built on Kubernetes, and workflows are defined declaratively, which simplifies creating and managing them.

Key features of Argo Workflows include:

- Declarative Workflow Definitions: Workflows are written in YAML or JSON by declaring tasks and the dependencies between them.

- Parallel Execution & Control Flow: Supports concurrent task execution and provides versatile control flow mechanisms like conditionals, loops, and branching.

- Conditional & Scheduled Triggers: Workflows can be initiated based on specific conditions or schedules.

- Advanced Task Orchestration: Offers advanced orchestration capabilities such as fault tolerance, retry strategies, and passing outputs between tasks.

- Visualization & Monitoring: Provides a web UI and command-line tools that display workflow status and progress, enabling real-time monitoring and inspection of each task's logs and outputs.
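To make these features concrete, here is a minimal, generic Workflow; it is an illustration, not part of our upgrade tooling. It declares task dependencies as a DAG, so `check-a` and `check-b` run in parallel once `prepare` finishes, and it retries a failed task before giving up. The task names, image, and retry limit are arbitrary placeholders.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Workflow
metadata:
  generateName: dag-example-
spec:
  entrypoint: main
  templates:
  - name: main
    dag:
      tasks:
      - name: prepare
        template: echo
        arguments:
          parameters: [{name: msg, value: "prepare"}]
      # Both checks depend only on "prepare", so they run in parallel.
      - name: check-a
        dependencies: [prepare]
        template: echo
        arguments:
          parameters: [{name: msg, value: "check a"}]
      - name: check-b
        dependencies: [prepare]
        template: echo
        arguments:
          parameters: [{name: msg, value: "check b"}]
  - name: echo
    inputs:
      parameters:
      - name: msg
    # Retry a failed pod up to 3 times before marking the task as failed.
    retryStrategy:
      limit: "3"
    container:
      image: alpine:3.19
      command: [echo, "{{inputs.parameters.msg}}"]
```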

For this upgrade, we orchestrate the upgrade tasks with two workflows: a PreSync Workflow and a PostSync Workflow. Splitting the work this way gives us finer control over each stage of the upgrade and keeps the process controllable, reliable, and efficient.

Upgrade Procedure

Upgrading a GreptimeDB cluster follows a fixed sequence of steps to ensure a smooth upgrade. The procedure and each of its phases are described below:

- Code Configuration Modification: In the configuration code, update the GreptimeDB cluster's image to the new version. Submit the change as a pull request and merge it into the GitHub repository.

- PreSync Workflow: runs the following steps in order:

  - Send Upgrade Notification: Notify team members that the upgrade has started.

  - Service Shutdown: Stop the GreptimeDB cluster's services so that no requests reach the system during the upgrade.

  - etcd Backup: Back up the etcd database to keep a copy of the cluster metadata from before the upgrade.

  - Upload the Backup Data to S3: Upload the etcd backup to an Amazon S3 bucket, so the backup is stored durably and can be restored.

  - Upgrade Dry Run: Before the actual upgrade, perform a dry run that simulates the upgrade and surfaces potential issues.

  - Upgrade: Perform the actual upgrade of the GreptimeDB cluster's metadata.
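The steps above map naturally onto a sequential Argo Workflow that Argo CD runs as a PreSync hook. The sketch below is one possible shape under stated assumptions, not our production manifest: `example/upgrade-tools:latest`, `notify.sh`, `shutdown.sh`, and `upgrade.sh` (with its `--dry-run` flag) are hypothetical, as are the etcd endpoint and the S3 bucket; the image is assumed to bundle `etcdctl` and the AWS CLI and to have S3 credentials available. A small volume carries the snapshot from the backup step to the upload step.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Workflow
metadata:
  generateName: greptimedb-presync-
  annotations:
    # Run this Workflow as an Argo CD PreSync hook, before the new image is applied.
    argocd.argoproj.io/hook: PreSync
    # Let Argo CD delete the Workflow once it succeeds.
    argocd.argoproj.io/hook-delete-policy: HookSucceeded
spec:
  entrypoint: presync
  # Scratch volume shared by the backup and upload steps.
  volumeClaimTemplates:
  - metadata:
      name: backup
    spec:
      accessModes: [ReadWriteOnce]
      resources:
        requests:
          storage: 1Gi
  templates:
  - name: presync
    # Each outer list item is one sequential step; a failing step stops the workflow.
    steps:
    - - name: notify-start
        template: run
        arguments: {parameters: [{name: cmd, value: "./notify.sh 'GreptimeDB upgrade started'"}]}
    - - name: shutdown
        template: run
        arguments: {parameters: [{name: cmd, value: "./shutdown.sh"}]}
    - - name: etcd-backup
        template: run
        arguments: {parameters: [{name: cmd, value: "etcdctl --endpoints=http://etcd.etcd.svc:2379 snapshot save /backup/snapshot.db"}]}
    - - name: upload-to-s3
        template: run
        arguments: {parameters: [{name: cmd, value: "aws s3 cp /backup/snapshot.db s3://example-backup-bucket/greptimedb/{{workflow.name}}/snapshot.db"}]}
    - - name: dry-run
        template: run
        arguments: {parameters: [{name: cmd, value: "./upgrade.sh --dry-run"}]}
    - - name: upgrade
        template: run
        arguments: {parameters: [{name: cmd, value: "./upgrade.sh"}]}
  # Generic template: runs one shell command in the (hypothetical) tools image.
  - name: run
    inputs:
      parameters:
      - name: cmd
    container:
      image: example/upgrade-tools:latest
      command: [sh, -c]
      args: ["{{inputs.parameters.cmd}}"]
      volumeMounts:
      - name: backup
        mountPath: /backup
```

Because every step goes through the same `run` template, adding or reordering a step is a one-line change in the `steps` list.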

Failure Recovery: If anything goes wrong during the upgrade, we can restore etcd from the backup, reverting the metadata to its pre-upgrade state.
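One way to wire this recovery into Argo Workflows is an exit handler that runs only when the workflow did not succeed. The fragment below sketches that option; it is an assumption, not necessarily how we trigger recovery. It assumes the snapshot was uploaded to a hypothetical `s3://example-backup-bucket/greptimedb/<workflow name>/snapshot.db` path, and `example/upgrade-tools:latest` and `restore-etcd.sh` are hypothetical. Restoring etcd (`etcdctl snapshot restore`, or `etcdutl snapshot restore` in etcd v3.5+) writes a new data directory that the etcd members must then be restarted on, which is why that part is left to a cluster-specific script. A failure that happens before the upload step leaves nothing to restore, so a real handler would need to check for that.

```yaml
# Fragment: merge into the PreSync Workflow's spec.
spec:
  # The exit handler runs after the main steps finish, whatever the outcome.
  onExit: exit-handler
  templates:
  - name: exit-handler
    steps:
    - - name: restore-etcd
        template: restore-etcd
        # Only restore when the workflow did not succeed.
        when: "{{workflow.status}} != Succeeded"
  - name: restore-etcd
    container:
      image: example/upgrade-tools:latest   # hypothetical tools image
      command: [sh, -c]
      args:
      - |
        set -e
        aws s3 cp s3://example-backup-bucket/greptimedb/{{workflow.name}}/snapshot.db /tmp/snapshot.db
        ./restore-etcd.sh /tmp/snapshot.db   # hypothetical, cluster-specific restore script
```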

Sync: Argo CD applies the updated configuration and brings up the new version of the GreptimeDB cluster.
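The configuration change that this Sync step applies can be as small as one line. The fragment below assumes the cluster is described by a `GreptimeDBCluster` resource from greptimedb-operator; the field layout follows the operator's `v1alpha1` examples and may differ between operator versions, and the cluster name and `<new-version>` tag are placeholders.

```yaml
apiVersion: greptime.io/v1alpha1
kind: GreptimeDBCluster
metadata:
  name: my-cluster          # hypothetical cluster name
spec:
  base:
    main:
      # The change merged in the upgrade pull request: point at the new release.
      image: greptime/greptimedb:<new-version>
  # frontend, meta, and datanode settings are unchanged and omitted here.
```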

PostSync Workflow: Send a success notification to team members.

Workflow Annotations: Add the annotation `argocd.argoproj.io/hook-delete-policy: HookSucceeded` to each workflow. This tells Argo CD to delete the workflow automatically once it completes successfully.
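Putting the last two items together, a PostSync Workflow carrying both annotations might look like the sketch below. It assumes notifications go to a Slack-style incoming webhook that accepts a JSON `text` payload; the `upgrade-notify` Secret, its `webhook-url` key, and the `SLACK_WEBHOOK_URL` variable are hypothetical.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Workflow
metadata:
  generateName: greptimedb-postsync-
  annotations:
    # Run after the sync succeeds and all resources are Healthy.
    argocd.argoproj.io/hook: PostSync
    # Delete the Workflow once it succeeds; a failed one is kept for debugging.
    argocd.argoproj.io/hook-delete-policy: HookSucceeded
spec:
  entrypoint: notify-success
  templates:
  - name: notify-success
    container:
      image: curlimages/curl
      command: [sh, -c]
      args:
      - |
        curl -sS -X POST -H 'Content-Type: application/json' \
          -d '{"text": "GreptimeDB upgrade succeeded"}' "$SLACK_WEBHOOK_URL"
      env:
      # Webhook URL read from a hypothetical Secret.
      - name: SLACK_WEBHOOK_URL
        valueFrom:
          secretKeyRef:
            name: upgrade-notify
            key: webhook-url
```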

Future Plans

By combining Argo CD Resource Hooks with Argo Workflows, we have automated GreptimeDB upgrades on the cloud, which has made our upgrade procedure noticeably more efficient and reliable. We plan to keep refining this process to make it more comprehensive and stable. The enhancements we are considering include:

Downgrade Operation: Beyond just upgrades, we plan to introduce a downgrade feature, allowing us to roll back to previous versions when necessary. This will offer us greater flexibility and fault tolerance.

Data Verification Before and After Upgrades: To ensure that an upgrade does not compromise data integrity, we intend to verify data before and after each upgrade. This involves checking data completeness and comparing the data across the upgrade to confirm that nothing is damaged or lost.

Read-Write Tests After Upgrades: To assess the system's performance and stability after an upgrade, we will run read-write tests that simulate real-world workloads. These tests will show how an upgrade affects the system and let us address potential issues promptly.

We firmly believe that continuously refining GreptimeDB's upgrade process and striving for optimal cloud-based upgrade automation can provide developers and users with a smoother and more reliable experience. If you're passionate about this domain or have any suggestions, we warmly invite you to join our Slack community for questions and discussions.