As we close another year, it's remarkable to reflect on how GreptimeDB has flourished, marking its first anniversary as an open-source project. This journey has been filled with learning curves and invaluable insights. Excitingly, we're announcing the launch of GreptimeDB v0.5, a significant leap forward for our open-source time-series database.

This version is particularly notable for the introduction of Remote WAL and Metrics Engine. These enhancements are pivotal in fortifying GreptimeDB's distributed capabilities and efficiently managing the resource demands posed by numerous small tables in monitoring environments.

Stay tuned as we delve into the key upgrades that define GreptimeDB v0.5, setting a new benchmark in our continuous evolution.

GreptimeDB v0.5 Updates

Key Features

Remote WAL

Currently, GreptimeDB utilizes local storage for its Write-Ahead Log (WAL), lacking disaster recovery capabilities. A distributed (Remote) WAL is foundational for GreptimeDB to implement advanced distributed functions like Region Migration, Rebalance, Split/Merge. These features are also key characteristics for achieving horizontal scaling and data hotspot splitting.

For our first Remote WAL version, instead of reinventing the wheel, we've chosen Kafka for building our initial Remote WAL storage service, considering its high-performance append-only writing style. Kafka provides a pub/sub-topic interface, with each topic linked to a message stream. The message streams are organized into inventories (also called partitions here) within the filesystem. Each directory maintains several segment files.

Writing a message to a specific topic in Kafka involves appending it to the end of the most recent segment file in a partition of that topic. This append-only method of writing enhances Kafka's performance, a key reason we chose Kafka as our Remote WAL storage service. For the Kafka client, we use rskafka, a purely asynchronous client implemented in Rust.

This first implementation of Remote WAL lays a strong foundation for robust distributed capabilities in GreptimeDB, and we will soon release the Region Migration feature built on top of the Remote WAL.

Metrics Engine

Unlike typical observability-focused time-series databases like Prometheus and VictoriaMetrics, GreptimeDB's table design is notably more complex and resource-heavy. This complexity poses challenges in managing a multitude of small tables using its default engine.

To tackle this, GreptimeDB's version 0.5 introduces the Metrics Engine, tailored to efficiently handle numerous small tables, similar to scenarios involving Prometheus-style metrics. This engine leverages synthesized wide tables, enhancing metric data storage and metadata reuse. This approach lightens the table load significantly, addressing the bulkiness inherent in the Mito engine.

The Metrics Engine is designed to address issues caused by numerous small tables in monitoring scenarios, aiming to minimize resource use and latency problems. This initiative marks the beginning of an extensive journey. In the short term, we're focusing on further optimizing resource usage, accelerating table creation processes, and boosting PromQL query performance for this new engine.

As we continue to update GreptimeDB, you can anticipate ongoing enhancements and refinements in the Metrics Engine.

Key Updates in Other Modules

- PromQL Compatibility Boosted to 82%

There's been significant progress with PromQL, where its compatibility has increased to 82%. This includes support for the histogram_quantile function, alignment with linear_regression behavior, and implementation of PromQL's AND and UNLESS operators.

For more details, refer to this issue.

- Ongoing Optimization of Mito Engine

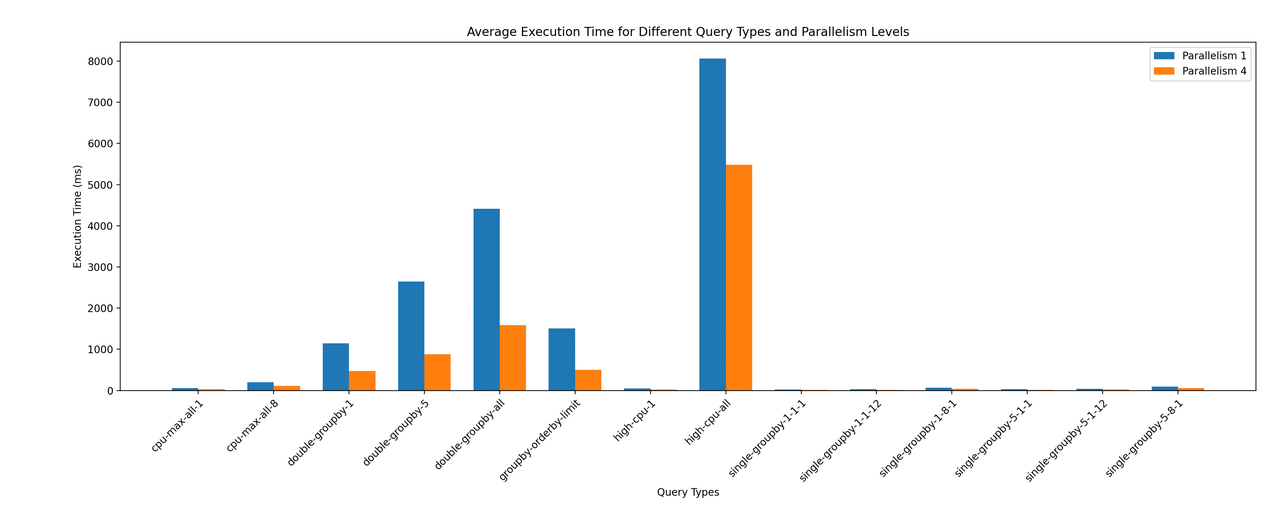

The Mito engine now features a new row group level page cache, proven to reduce scan times by an average of 20% to 30%. Enhancements also include parallel scanning capabilities for both SST and Memtable. These upgrades have notably boosted GreptimeDB's scanning efficiency in the TSBS Benchmark, with certain scenarios experiencing performance improvements of up to 200%.

Specifying Different Storage Backends When Creating Tables Previously limited to a single storage backend for tables, GreptimeDB now offers the flexibility to configure various storage backends at startup. This enhancement enables the assignment of distinct storage backends to tables upon their creation. For instance, it's now possible to store one table locally and another on S3.

Support for Nested Range Expressions Range expressions like

max(a+1) Range '5m' FILL NULLcan now be nested within any expression. For instance:

Select

round(max(a+1) Range '5m' FILL NULL),

sin((max(a) + 1) Range '5m' FILL NULL),

from

test

ALIGN '1h' by (b) FILL NULL;This SQL query is semantically equivalent to:

Select round(x), sin(y + 1) from

(

select max(a+1) Range '5m' FILL NULL as x, max(a) Range '5m' FILL NULL as y

from

test

ALIGN '1h' by (b) FILL NULL;

)

;- Added the

ALIGN TOclause and supportedIntervalqueries.

Interval expressions

Interval expressions can be used as an optional duration string replacement, following the RANGE and ALIGN keywords.

SELECT

rate(a) RANGE (INTERVAL '1 year 2 hours 3 minutes')

FROM

t

ALIGN (INTERVAL '1 year 2 hours 3 minutes')

FILL NULL;ALIGN TO clause

Users can use the ALIGN TO clause to align time to their desired point.

SELECT rate(a) RANGE '6m' FROM t ALIGN '1h' TO '2021-07-01 00:00:00' by (a, b) FILL NULL;The available ALIGN TO options include:

- Calendar (default): Align to the UTC timestamp of 0.

- Now: Align to the current UTC timestamp.

- Timestamp: Align to a specific timestamp specified by the user.

Future Plans

Building on the foundation of Remote WAL, we plan to implement Region Migration in GreptimeDB, aiming to facilitate load balancing. Initially, Region Migration will be manually triggered through a control API, with future developments aiming at automatic load balancing scheduling.

Continuous optimization of the core Mito Engine (which also forms the basis for the Metrics Engine) is ongoing, with a focus on enhancing query performance and reducing data transfer latency with object storage.

Another significant feature in development is the inverted index, which is expected to substantially improve query performance in certain scenarios. We aim to release this feature by the end of January, and updates on its progress can be found here.

References: [1] https://github.com/influxdata/rskafka

[2] https://github.com/GreptimeTeam/greptimedb/issues/1042

[3] https://github.com/orgs/GreptimeTeam/projects/38/views/2